As utility systems modernize, adding more distributed technologies and smart capabilities, many power providers are finding themselves outpaced by the sheer volume of information the modernized grid produces.

Utilities used to read customer meters 12 times a year. Now advanced metering infrastructure (AMI) often reports every 15 minutes. That’s thousands of times more information, and it is just a small part of what is coming at electric utilities. There are also data about billing and workforce management, operations and maintenance, and system planning, not to mention the information generated by SCADA systems and sensors used across the transmission and distribution grids.
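To make the “thousands of times” figure concrete, the short Python sketch below counts readings per meter per year. It assumes one reading per 15-minute interval and a 365-day year, and it ignores the register reads and event messages many meters also send.

```python
# Compare annual meter reads: monthly manual reads vs. 15-minute AMI intervals.
MANUAL_READS_PER_YEAR = 12
MINUTES_PER_DAY = 24 * 60
DAYS_PER_YEAR = 365  # assumption: ignores leap years


def ami_reads_per_year(interval_minutes: int) -> int:
    """Number of interval readings one meter reports in a non-leap year."""
    return (MINUTES_PER_DAY // interval_minutes) * DAYS_PER_YEAR


reads = ami_reads_per_year(15)
ratio = reads // MANUAL_READS_PER_YEAR
print(f"{reads} reads per meter per year, about {ratio}x the monthly schedule")
```

For a single meter that works out to 35,040 readings a year, roughly 2,920 times the monthly schedule, before multiplying by the number of meters a utility serves.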

The data deluge is a trend that affects all utilities, regardless of size or business model. In a quick check with investor-owned utilities, co-ops and municipal providers across multiple regions, all affirmed that the increase in data and the opportunities it presents are significant.

“TVA is facing both an increase in the volume of, and appetite for, data,” said Scott Brooks, a spokesperson for the Tennessee Valley Authority, a federally run utility.

“The increase is an order of magnitude,” echoed Tracy Warren, spokesperson at the National Rural Electric Cooperative Association, the co-op trade group. “Co-ops have been actively engaged in finding ways to manage the onslaught.”

And while many utility managers already feel inundated, greater volumes are likely to come.

Smart meters and advanced sensing data “will be critical as the energy industry transforms to a more customer-centric environment,” said National Grid spokesperson Paula Haschig.

Even Omaha Public Power District, a small muni adding distributed energy resources (DERs) at a modest rate, is seeing its data grow “at an ever-quickening pace,” according to spokesperson Jodi Baker.

Figuring out how to manage those data could hold the key to new revenue streams and improved grid operation, if utilities can find software tools to integrate multiple grid technologies and handle ever-escalating quantities of information. Recent advances in predictive technology and cloud computing may offer a way forward.

The rise of utility analytics

As the grid becomes more digitized, analytics is the buzzword on many lips in the power sector.

“Utilities are struggling to cope with the growth of data and they have three general approaches,” said Navigant Research analyst Lauren Callaway, lead author of the just-released report “Utility Analytics.”

Most often, “they don’t know what to do with it so it sits largely unused,” she said. Or “they start analyzing it and create more of a mess than there was to begin with.” For the few that take on analytics, she said, “it can work out well or poorly.”

Callaway’s point is borne out by a survey from the Utility Analytics Institute, which recently reported that over half of utilities make “very limited use” of the data they are collecting, almost 40% are “trying to figure out what to do with it,” and only 5% to 10% have “standardized data analytics tools and processes.”

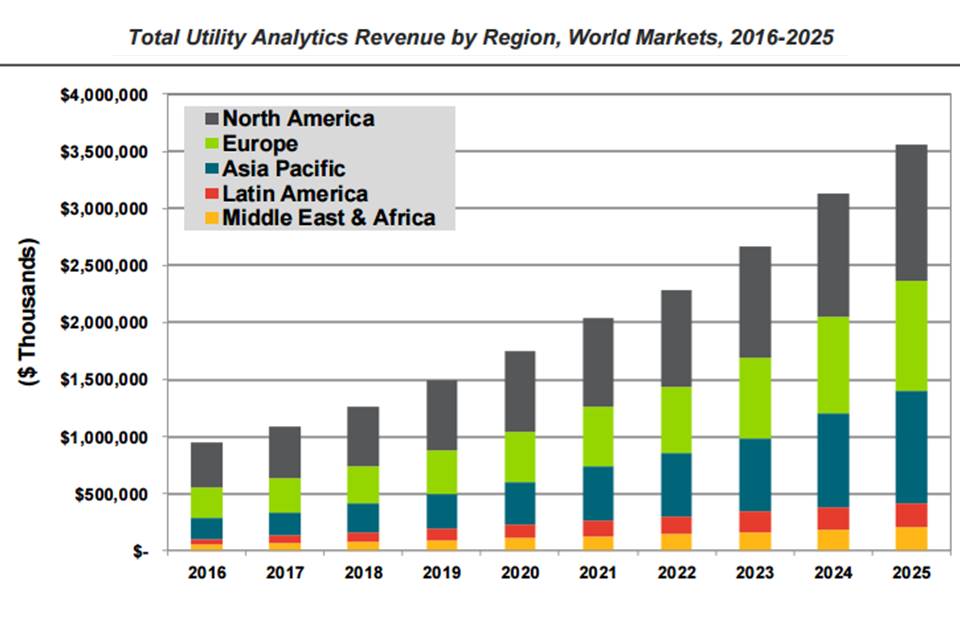

Globally, revenue for analytics is forecast to grow from 2016’s $944.8 million to over $3.6 billion in 2025, a compound annual growth rate (CAGR) of 15.9%, according to Navigant. In North America, revenue is expected to grow from $392.3 million in 2016 to nearly $1.2 billion in 2025, a CAGR of 13.2%.
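The growth rates can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The Python sketch below applies it to Navigant’s figures, treating 2016 to 2025 as nine compounding years. Because the report’s endpoints are rounded (“over $3.6 billion,” “nearly $1.2 billion”), the recomputed rates are approximate.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


YEARS = 2025 - 2016  # nine compounding periods

# Navigant's figures in millions of dollars; 2025 endpoints are rounded in the report.
north_america = cagr(392.3, 1200.0, YEARS)
global_market = cagr(944.8, 3600.0, YEARS)

print(f"North America: {north_america:.1%}")  # 13.2%
print(f"Global: {global_market:.1%}")         # close to the reported 15.9%
```

The North American figure reproduces the reported 13.2%; the global figure lands within a few tenths of a point of 15.9%, consistent with rounding in the published endpoints.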

But even the categories into which Big Data can be segmented for analysis are not yet standardized.

“Analytics at utilities is a very wide area and it is hard to capture the many different classes and types,” Callaway said.

For the full range of utility analytics, many of which go beyond smart meter data, Navigant uses four categories: “grid operations analytics, asset optimization analytics, demand-side management analytics, and customer operations analytics.”

Approaches are so wide-ranging that “the only must-have tool is a computer,” Callaway said. “And the only must-have staff is someone who knows how to use the analytics program.”

Analyzing information, of course, did not start with computers, but “today’s utility is faced with a confluence of factors that makes the need more urgent and the task more complicated,” Navigant reports.

“No utility does nothing with their smart meter data,” Callaway said. But using it for billing or outage management doesn’t get at the value that could come from using it for utility-wide analysis. “It is more common that they don’t have the vision to see the potential.”

Failing to see the implications of data that remain siloed within utility departments can cause inefficiencies and lead to bad decisions based on inadequate information, the report adds.

Data yesterday and today

For many utilities, much of their data struggle stems from having rolled out smart meters to customers before they could fully assimilate the data, GTM Research notes in its just-released report “Utility AMI Analytics at the Grid Edge.”

An example is Georgia Power’s transition to smart meters. Between 2007 and 2012, that rollout was “one of the largest recent sources of additional data for the company,” spokesperson John Kraft told Utility Dive.

Utilities initially saw AMI as “low-hanging fruit” to improve “meter-to-cash processes,” GTM reports. As those benefits were achieved, utilities began developing new value streams in “energy conservation through voltage reduction, improved customer engagement, demand management, and improved revenue assurance practices.”

That process accelerated when 2009 economic stimulus funding and state mandates “catalyzed” a U.S. rollout of “nearly 30 million smart meters in less than a decade.”

As distributed resources proliferate, utilities see AMI as a means to get net load data from customer sites. Those data could improve situational awareness, enable time-varying rates, and improve billing.
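Net load in each interval is simply consumption minus on-site generation; a negative value means the site is exporting power to the grid. The Python sketch below assumes, for simplicity, that separate per-interval consumption and generation series are available (many meters instead record energy delivered and received at the service point). The sample values are hypothetical.

```python
from typing import List


def net_load_kwh(consumption: List[float], generation: List[float]) -> List[float]:
    """Per-interval net load: consumption minus on-site generation (negative = export)."""
    if len(consumption) != len(generation):
        raise ValueError("interval series must be the same length")
    # Round to 3 decimals (watt-hour resolution) to hide float noise.
    return [round(c - g, 3) for c, g in zip(consumption, generation)]


# Hypothetical 15-minute kWh values for a home with rooftop solar.
usage = [0.40, 0.35, 0.30, 0.45]
solar = [0.00, 0.20, 0.55, 0.60]

net = net_load_kwh(usage, solar)
exporting = [i for i, v in enumerate(net) if v < 0]  # intervals with reverse flow
print(net, exporting)
```

Intervals where the result turns negative are the ones that matter for situational awareness on feeders with heavy rooftop solar, since power is flowing back toward the grid.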

As utility IT departments install hardware capable of handling larger amounts of data, utility leaders are urging engineers and vendors to help them unlock the data’s benefits.

Significant barriers remain, but software from providers such as OSIsoft, GE, AspenTech, and Splunk is improving interoperability among the more than 120 software platforms used in utility silos across the country and helping those platforms handle new technologies.

“OSIsoft is the data backbone for over 1,000 utilities,” the company reports. Its PI (process intelligence) system is designed to assimilate information, compress it, digest it, and make it useful.

The PI System’s ability to provide situational awareness across Xcel Energy’s U.S.-leading installed wind capacity has saved the utility $46 million over six years, according to OSIsoft.

Arizona Public Service, the state’s dominant electricity producer, monitors 170 MW of utility-scale solar with the PI System, reducing its need for field technicians to six for the entire state, OSIsoft also reported.

The PI System essentially gathers data on “energy consumption, production process flows, vibration, heat, pressure, temperature, asset utilization and other metrics from sensors and equipment and then delivers it up in a coherent, unified way,” a company fact sheet explains.
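Data historians avoid archiving every raw sample. The Python sketch below shows one simple idea behind that kind of compression, a deadband (report-by-exception) filter that keeps a reading only when it moves meaningfully from the last stored value. It is a generic illustration, not OSIsoft’s actual algorithm, and the 0.5-degree deadband and sample readings are hypothetical.

```python
from typing import Iterable, List, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, measured value)


def deadband_filter(samples: Iterable[Sample], deadband: float) -> List[Sample]:
    """Keep a sample only if it differs from the last kept value by more than `deadband`.

    Production historians use more elaborate schemes, such as swinging-door
    compression; this sketch only shows the basic storage-saving idea.
    """
    kept: List[Sample] = []
    for ts, value in samples:
        if not kept or abs(value - kept[-1][1]) > deadband:
            kept.append((ts, value))
    return kept


# Hypothetical transformer temperature readings, one per second.
raw = [(0, 65.0), (1, 65.1), (2, 65.2), (3, 65.9), (4, 66.0), (5, 67.1)]
archived = deadband_filter(raw, deadband=0.5)
print(archived)  # [(0, 65.0), (3, 65.9), (5, 67.1)]
```

Half of the samples are dropped here while every significant change is preserved; the choice of deadband trades storage against fidelity.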

In a sense, the data platform is “half data management and half analytics,” said Bill McAvoy, OSIsoft’s global industry principal and a former Northeast Utilities executive. “It is not a separate analytics package but it allows system operators to see hardware and respond to conditions in real time.”

Utilities always took in real-time data, but it was typically crudely presented on “one-line displays for the control center,” McAvoy said. “OSIsoft captured over 90% of the market because it could take the real-time Energy Management System data, use it as a historian, and put it up for visualization, allowing situational awareness and sharing of the information outside the control center.”

With DER penetrations on utility distribution systems now rising exponentially, OSIsoft is leveraging its position as one of the utility industry's leading historians and data compilers into a new role as a data platform for distribution management systems, McAvoy said.

Data to increase value

The explosive growth in the basic volume of utility data alone is hard to contextualize, Callaway said. “It’s terabytes and terabytes.”

But the basic tenets of utility data strategy that have developed alongside that growth are less difficult to grasp.

“The utilities that have been successful have come up with a strategy across the enterprise by recruiting team members from different parts of the utility to develop an organizational analytics roadmap,” Callaway said.

The next step is “a strong data governance policy based on best practices” with which both in-house users and vendors align.

“Any utility that does everything in-house or outsources everything will have challenges,” Callaway said. Good implementation requires staff who know the organization but can also work with vendors and data scientists.

Utilities are beginning to attract some IT and data science talent, Callaway said. “But people inside the utility industry often say those people are more likely to prefer workplaces with a different environment.”

Once the right people and partners are in place, Callaway said, a key aim should be to define clear goals for any data management project.

Utilities’ first mistake is “letting the analysis of data turn into a technology project and not a project aimed at creating value,” she said.

Two other mistakes lead to bad outcomes: overlooking the importance of data governance and failing to consider cybersecurity.

Fearing those pitfalls, many utilities are putting off decisions until they see more mature technology, Callaway said. But a better strategy is to actively study how data combined with analytics can create business value.

General Electric’s Predix is among the leaders in today’s software market because its aim — using predictive technology to allow machines to make automatic decisions — can help utilities do just that, according to Keith Grassi, the Distribution and Smart Grid segment leader for GE Grid Solutions.

Predix is designed to use machine-to-machine communication to manage data from many software historians, including OSIsoft’s and GE’s in-house Proficy product, to “enable a more real-time environment,” Grassi said. That “is the foundation of large-scale analytics because it allows the layers of business from the grid edge to the cloud to move data and interaction across the domains,” he said.

Predix now allows some automated decision making about real-time information. There is “device auto-discovery so that the software can understand what data is important as actionable information at the next level up,” Grassi said.

For a utility, data from a field edge device can be kept for use at the grid edge or sent into the distribution network. “The system builds an analytics pyramid, a decisionmaking perspective,” Grassi said. “As information goes up the value chain, a picture forms of what is going on in the real time network, allowing the system to make smarter decisions.”

With Predix embedded throughout a utility's systems, its analytic decision-making can eventually be available across the utility, from the distribution system to the cloud, he added. The intent is to create order and visibility to allow smarter asset management and long-term planning.

“Planning is where we are headed,” Grassi said. “We are starting that journey now toward domain-wide planning. The analytics component is the part we are building. The rest is already in place.”

This kind of predictive analytics is not yet happening system-wide, Grassi acknowledged. But Predix is starting to identify which information in the data is important.

“The idea is to only bring the data across the entire energy network that provides business and decision-making value,” Grassi said. “Leave the bulk of it in the generation or distribution system to perform its tasks at the local level. The key is knowing what data at the local level to bring up.”
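Grassi’s principle of keeping the bulk of data local and forwarding only what carries decision value can be sketched as an edge-side function that summarizes a batch of meter voltages and passes up only the out-of-range readings. The Python code below is a hypothetical illustration, not how Predix works; the 114 V to 126 V band is the ANSI C84.1 Range A service-voltage limit on a 120 V base, used here only as an example threshold.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict

# Example threshold: ANSI C84.1 Range A service voltage on a 120 V base.
V_MIN, V_MAX = 114.0, 126.0


@dataclass
class UpstreamMessage:
    """What the hypothetical edge device forwards instead of every raw reading."""
    meter_count: int
    avg_voltage: float
    exceptions: Dict[str, float]  # meter ID -> out-of-range voltage


def summarize_at_edge(readings: Dict[str, float]) -> UpstreamMessage:
    """Aggregate locally; pass up only a summary plus readings outside the band."""
    if not readings:
        raise ValueError("no readings to summarize")
    exceptions = {m: v for m, v in readings.items() if not V_MIN <= v <= V_MAX}
    return UpstreamMessage(
        meter_count=len(readings),
        avg_voltage=round(mean(readings.values()), 2),
        exceptions=exceptions,
    )


# Hypothetical 15-minute voltage readings from meters on one transformer.
batch = {"m1": 121.4, "m2": 119.8, "m3": 127.2, "m4": 120.6}
msg = summarize_at_edge(batch)
print(msg)
```

Four raw readings become one summary plus a single flagged meter; at the scale of millions of meters, that selectivity is what keeps upstream systems from drowning in data.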

Data tomorrow

However much information utilities face today, it likely pales in comparison to the wave of data they will face as more customers add distributed generation, microgrids proliferate and demand-management programs become more sophisticated.

Redesigning, analyzing, and optimizing the distribution system to accommodate the coming levels of DER will require evaluating permutations of factors “beyond the reach of the collection of single-focus systems utilities rely upon for their operations today,” according to vendor Qado Energy.

“The entire utility industry is going to be rocked by the introduction of advanced software platforms over the next 10 to 20 years that will allow them to deliver a truly efficient utility infrastructure,” said Qado founder and CEO Brian Fitzsimmons.

While at the Massachusetts Institute of Technology, Fitzsimmons foresaw a “massive explosion in data necessary to understand and quantify the impact of high DER penetrations,” he said.

But the legacy analytics tools utilities were using would not do, he thought. “They were built for a static, one-way system with one million data points, and that is now a dynamic, two-way system with one billion data points.”

Qado Energy’s GridUnity software platform, now licensed by PG&E, the Hawaiian Electric Company (HECO), and Southern California Edison (SCE), “is an advanced distribution system analytics platform used by utilities to do planning, forecasting, and cost calculations for high DER penetrations.”

The Qado point solution platform “sits in an elastic cloud computing environment where the top five players are Amazon Web Services, Microsoft Azure Cloud Computing, IBM, Oracle, and Google,” Fitzsimmons said.

“SCE has 4,500 distribution circuits that serve close to 5 million meters,” he said. “GridUnity allows the utility to use predictive and descriptive analytics to meet the California Public Utilities Commission’s requirement that the state’s IOUs understand how their distribution systems would behave with 50%, 75%, or 100% penetrations of DER.”

Using cloud computing, the Qado platform allowed SCE to see results from 100,000 simulations of high DER penetrations on its distribution system, Fitzsimmons said. The simulations modeled impacts over the next five years, yet they ran in 60 minutes by calling on as many as 1,000 servers.
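A rough back-of-envelope calculation shows why that run depends on cloud scale. The Python sketch below assumes all 1,000 servers were busy for the full hour and every simulation took the same time; since the figure is “as many as” 1,000 servers, actual per-simulation times could differ.

```python
# Back-of-envelope throughput for the SCE simulation run (figures from the article).
simulations = 100_000
servers = 1_000          # "as many as" 1,000; assumed all in use
wall_clock_minutes = 60

# Assumes perfectly even load: every server runs the same number of simulations.
sims_per_server = simulations / servers
minutes_per_sim = wall_clock_minutes / sims_per_server
# The same total work run back to back on a single server.
serial_hours = simulations * minutes_per_sim / 60

print(f"{sims_per_server:.0f} sims/server, {minutes_per_sim * 60:.0f} s each, "
      f"~{serial_hours / 24:.0f} days on a single server")
```

Under those assumptions each simulation takes about 36 seconds, and the same workload on one machine would take roughly 1,000 hours, or about six weeks, instead of one hour.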

“That level of planning will be required by system operators all over the world soon,” Fitzsimmons said. But utilities can’t afford supercomputers. The only way they can access that computational scale for planning simulations is in the cloud with purpose-built software.

Only the most advanced utilities have moved to cloud computing, Callaway said, though she expects others to move gradually in that direction.

“It is a big undertaking and raises a lot of security issues but I recommend that utilities begin considering it,” she said.

One of the best uses of cloud computing is currently in testing, she added. “Deploying applications over the cloud is a lot less expensive and time-consuming than building them in-house for testing and it avoids burdening the utility’s communications network.”

The GridUnity platform has three other major functions. Two make up a sort of TurboTax for DER interconnection applications and are being used at HECO and PG&E to streamline processing. PG&E, Fitzsimmons pointed out, is adding almost 10,000 DER systems each month.

That is roughly 120,000 new generation systems coming online every year, each bringing with it the complexity of a dynamic, two-way flow of electrons. There is no comparable data challenge, Fitzsimmons believes.

“Air traffic control has life-and-death stakes and the numbers are big, but there is another level of complexity to this because 10,000 new nodes are being added every month,” he said. The volume of data is comparable to that of Wall Street and the banking system, “but they don’t have the same risk,” he added.

“With the massive volumes of DER coming online yearly, planning can’t be done over years and utilities are beginning to understand the complexity they face. Yet their data centers are consumed with the data from day-to-day operations. Only predictive and descriptive analytics can manage both the complexity and the risk of the coming challenge,” he said.

For now, however, utilities need to remember their analytics challenge is not about investing in certain data warehouses or a cloud platform, Callaway said. It’s about investing in what’s right for a given purpose.

“There is no standard, must-have tool or technology,” she said. “But not understanding the technology, not understanding the available potential solutions, and not understanding the value it is possible to create are the worst things a utility can do.”