Among this century's most important innovations are emerging data analytics capabilities that allow utilities to use archived and real-time data to make their systems more reliable, affordable and clean.

Cost-effective electricity generation from variable renewables is enabling clean transportation and other electrification initiatives. But those initiatives will make the resulting clean energy economy dependent on an increasingly large and complex power system. Automated data analytics can provide the granular, real-time situational awareness needed to manage it effectively.

"Data is a linchpin of our collective energy future," Matt Schnugg, senior director of data and analytics for GE Power Digital, said in an email to Utility Dive. Data will optimize assets across "the energy network" as utilities go about "navigating the digital transformation," he added.

The use cases for data analytics are wide-ranging and proliferating. Weather forecasting based on data analytics is prompting utilities to harden their systems ahead of extreme weather events. Data analytics are also delivering new services and savings through utility-led energy efficiency programs that cut customer bills and lower utilities' system costs. And digital simulations are being used to refine new hardware before it is installed.

Data analytics are also creating significant savings from predictive maintenance. OSIsoft-processed data has saved Duke Energy over $130 million by predicting transformer failures before they occurred, former Duke VP and CIO Chris Heck told an April 2018 conference.

"The unprecedented interconnectedness of systems and available computational power through the cloud are allowing new system-wide data analytics applications," Clean Power Research (CPR) Executive Director Jeff Ressler told Utility Dive. "The era of siloed utilities is over, and executives are working on creating high fidelity, high quality data structured to be used throughout the company."

No single software product will be the answer, he added, as growing volumes of data and increasingly integrated systems are layered together and analyzed by artificial intelligence (AI) using machine learning.

That will lead to the next stage of data analytics, in which a utility community pools data and computing power "for the deep machine learning AI requires," Electric Power Research Institute (EPRI) AI lead and VP for Transmission and Distribution Andrew Phillips told Utility Dive. This will allow "shared, curated data and a secure platform to develop solution algorithms."

Data analytics can ultimately lead to a decentralized network that allows peer-to-peer energy transactions in a connected community, energy sector analysts told Utility Dive. But utilities must first fully assimilate and integrate the data and its capabilities.

Digitize, digital, integrated

The first step toward a fully digital utility is the use of operational and third-party data "on a day-to-day basis," GE's Schnugg said. To use the "disparate and often siloed" data effectively, it must be brought into software that can process "hundreds of gigabytes of data per day" and perform "near real-time analytics on individual system assets and on the overall network."

While 91% of utility leaders see such data use as "crucial to the future success of their utilities," only 23% said their companies are making "capital expenditure decisions based on predictive analytics," according to a survey of 150 utilities released March 1 by energy research firm Zpryme and ABB.

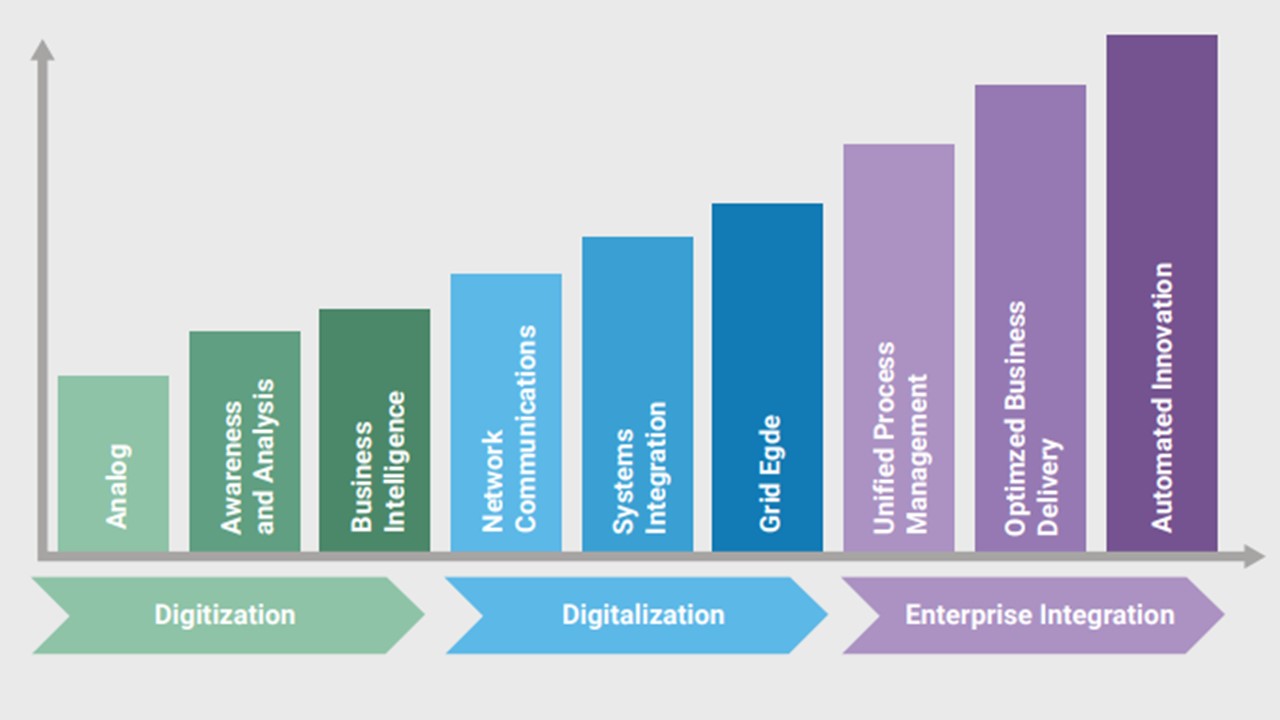

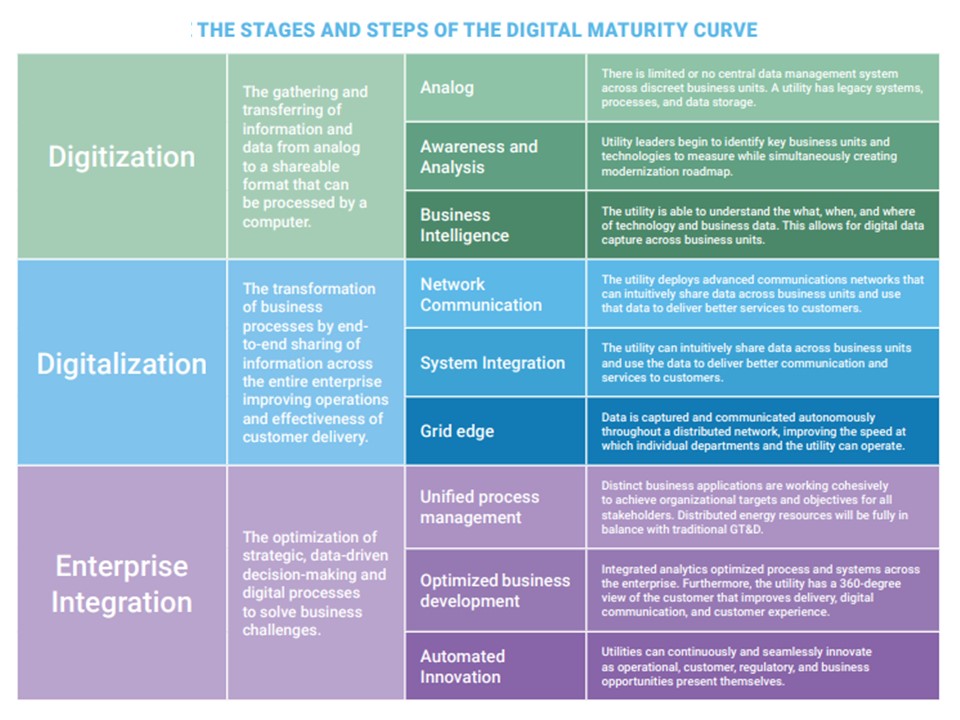

Zpryme's survey presented a three-stage, nine-step "Digital Maturity Curve" along which utilities can progress from "digitization," which is assimilating data, to "digitalization," which is using data. In "enterprise integration," the final stage, utilities will "communicate, monitor, compute, and control grid and customer operations with real-time intelligence and situational awareness," the survey reported.

Moving beyond the digitization stage will enable a new business model, the survey added. But that will necessitate spending billions of dollars over a decade for automated communication networks, internet-of-things (IoT) devices, distributed energy integration, and AI.

Finally, enterprise integration — in which data analytics is fully automated and integrated throughout the utility company — will optimize "decision-making, safety, security, sustainability, and reliability," the report added. Automated utilities can then take on "the business challenges of aging infrastructure, a regulatory system in flux, distributed energy resources, and the convergence of information technology and operations technology."

But full enterprise integration will require computational advances that have not yet been achieved.

Data analytics is "the core of enterprise integration, but the industry as a whole is just at the end of digitization and the start of digitalization," Zpryme Director of Research Chris Moyer, a co-author of the survey report, told Utility Dive. "It is not yet clear AI will be mature enough in the next five to ten years to allow for full enterprise integration."

Only as utilities gradually pilot "intelligent systems, AI, and communication networks" will it become clear whether the return on investment justifies spending on those technologies, the survey acknowledges.

Regulatory reforms will also be necessary so that utilities can benefit as much from operating expenses for cloud-based services as they do from capital expenditures on hardware, Moyer added.

But when enterprise integration is achieved, decision-making with AI can make a "self-healing grid" more reliable as it becomes more complex, the Zpryme survey reported. It can also optimize "the customer experience" and allow "a value-added services business model."

Enterprise integration will not remove the need to address the physical and cybersecurity risks that utility executives identified as a major concern in Utility Dive's most recent industry survey, Zpryme added.

Some utilities have already begun working toward the digitalization stage by developing uses for data that require more sophisticated and system-integrated analytics, Moyer said.

Exelon Utilities has developed 120 use cases for system-integrated data analytics "and the pipeline is growing," Utility Analytics lead Brian Hurst told Utility Dive.

Use cases

Use cases for data analytics can be divided into asset-based and network-based analytics applications, GE's Schnugg said. Proactive maintenance of assets or systems can reduce the cost of failures, and enhanced network situational awareness can help locate assets and improve energy theft detection.

Asset-based analytics applications address "a single asset or a small number of highly valuable assets in a fleet" to either extend their life or to optimize their performance, he said. They rely largely on algorithmic analysis of sensor monitoring.
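
Asset analytics of this kind often starts from trend analysis of sensor readings. The following is a minimal, hypothetical illustration, not GE's method. It assumes one sensor value per asset per day (for example, transformer top-oil temperature) and an alarm threshold chosen by the utility. It fits a least-squares line to the readings and estimates how many days remain before the trend crosses the threshold. The function names, readings and threshold are all assumptions for illustration.

```python
from typing import Dict, List, Optional, Sequence


def days_until_threshold(readings: Sequence[float], threshold: float) -> Optional[float]:
    """Estimate days from the last reading until a linear trend crosses `threshold`.

    Hypothetical helper: fits an ordinary least-squares line to evenly spaced
    daily readings. Returns None when the trend is flat or falling, and 0.0
    when the fitted trend has already reached the threshold.
    """
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    mean_x = (n - 1) / 2  # days are indexed 0..n-1
    mean_y = sum(readings) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(readings))
    slope = sxy / sxx
    if slope <= 0:
        return None  # no upward trend, so no projected crossing
    intercept = mean_y - slope * mean_x
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - (n - 1))


def flag_assets(fleet: Dict[str, List[float]], threshold: float, window_days: float) -> List[str]:
    """Return IDs of assets whose projected crossing falls within `window_days`."""
    flagged = []
    for asset_id, readings in fleet.items():
        days = days_until_threshold(readings, threshold)
        if days is not None and days <= window_days:
            flagged.append(asset_id)
    return sorted(flagged)


if __name__ == "__main__":
    # Hypothetical readings, not real utility data.
    fleet = {
        "T1": [60.0, 61.0, 62.0, 63.0, 64.0],  # rising about 1 degree per day
        "T2": [60.0, 60.0, 60.0, 60.0],        # flat
    }
    print(days_until_threshold(fleet["T1"], 70.0))  # 6.0
    print(flag_assets(fleet, threshold=70.0, window_days=30.0))  # ['T1']
```

A straight-line fit ignores load and ambient temperature, which a production system would need to model. It is used here only because it is easy to inspect.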

Network analytics applications "trade the depth of knowledge of a single asset for the massive breadth of an entire energy network," Schnugg said. Instead of the performance of individual assets, they optimize "the interaction of millions of assets, or nodes, in an extended network."

Exelon and GE have partnered on network analytics to improve storm readiness, he added. "We conservatively estimate that it saves $590,000 per 500,000 [utility] meters, per year. This primarily stems from outage prevention."
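
Schnugg's figure can be restated per meter. The next step assumes that savings scale linearly with meter count. The quote gives only a rate per 500,000 meters, so that scaling is an assumption. Under it, the arithmetic for a hypothetical utility with 2 million meters is:

$$
\frac{\$590{,}000}{500{,}000 \text{ meters}} = \$1.18 \text{ per meter per year},
\qquad
2{,}000{,}000 \text{ meters} \times \$1.18 = \$2.36 \text{ million per year}.
$$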

Siemens' MindSphere platform for big data supports both asset and network analytics, including "grid control, grid applications, grid planning, and analytics" use cases, Siemens Chief Policy Officer for the Digital Grid Chris King told Utility Dive in an email.

Using extensive "data mining and analysis," Siemens can identify the ideal location, timing, sizing and other characteristics of grid infrastructure to guide a utility toward the most cost-effective investments, King said. It can also identify locations where utility spending should go to non-wires alternatives (NWAs), and it can efficiently integrate utility-scale renewables and distributed energy resources (DERs).

"An increasing fusion of tools and infrastructure can digest and analyze system and customer data," CPR's Ressler said. CPR's PowerClerk tool is used by over 40 utilities to manage and automate interconnection programs, but the data it produces "can also be used by CPR's WattPlan to allow utility planners to make intelligent long-term planning decisions about DERs."

OSIsoft is largely focused on asset analytics. Its software captures data from infrastructure across utility systems and "structures it to make it understandable and actionable," OSIsoft IoT analyst Michael Kanellos told Utility Dive. "Going forward, data is going to be the lifeblood of every utility."

In addition to saving Duke Energy $130 million on transformer maintenance, OSI data helped DTE Electric reduce outages by 6.6 million minutes per year, according to a 2017 OSI conference presentation by DTE senior software engineer Cameron D. Sherding.

OSIsoft's detailed data on wind and solar projects makes renewables more cost-effective, Kanellos said. Xcel Energy, Sempra Energy and others are feeding OSI data to algorithms to increase wind and solar plant output and reduce maintenance costs, which lowers their levelized costs.

While Duke Energy has saved millions on maintenance through data analytics, its most cost-effective use case has been energy theft detection, Duke spokesperson Catherine Hope Butler emailed Utility Dive. "We were able to use machine learning on billing, smart meter data and other data sets to better understand when we had energy theft issues across our systems."
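
Duke did not describe its model. The following is a much simpler stand-in for the machine learning Butler describes: a hypothetical, rule-based statistical screen, not a trained model. It looks in smart meter data for one common theft signature, a sudden and sustained drop in a meter's consumption relative to its own history. Each meter is scored by how many baseline standard deviations its recent average falls below its earlier average. The function names, the seven-day window and the score threshold of 3 are illustrative assumptions.

```python
from statistics import mean, stdev
from typing import Dict, List, Sequence


def drop_score(daily_kwh: Sequence[float], recent_days: int = 7) -> float:
    """Standard deviations by which recent mean usage falls below the baseline mean.

    Hypothetical helper: the baseline is every day before the last `recent_days`.
    Positive scores mean usage dropped; negative scores mean it rose.
    """
    baseline = list(daily_kwh[:-recent_days])
    recent = list(daily_kwh[-recent_days:])
    if len(baseline) < 2 or not recent:
        raise ValueError("need at least two baseline days and one recent day")
    sigma = stdev(baseline) or 1e-9  # guard against a perfectly flat baseline
    return (mean(baseline) - mean(recent)) / sigma


def flag_suspects(meters: Dict[str, List[float]], threshold: float = 3.0,
                  recent_days: int = 7) -> List[str]:
    """Return meter IDs whose drop score meets `threshold`, for field review."""
    return sorted(
        meter_id
        for meter_id, usage in meters.items()
        if drop_score(usage, recent_days) >= threshold
    )


if __name__ == "__main__":
    # Invented daily kWh values for illustration only.
    meters = {
        "A": [20.0, 22.0] * 7 + [5.0] * 7,  # sharp, sustained drop in the last week
        "B": [20.0, 22.0] * 10 + [20.0],    # steady usage
    }
    print(flag_suspects(meters))  # ['A']
```

A drop alone is not proof of theft. Vacancies, new rooftop solar or efficiency upgrades produce the same pattern. For that reason the sketch only builds a review list, and richer models that also draw on billing and other data sets are needed to separate those cases.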

New approaches to locating energy efficiency and to engaging customers are beginning to show what network analytics will be capable of.

Demand response necessarily focuses on reducing utility peak load at times and locations of system stress, Paul Schueller, CEO of demand response and energy efficiency portfolio aggregator Franklin Energy, told Utility Dive. "But data analytics allows targeting energy efficiency measures where and when the utility system needs them, and that is new."

Franklin's NGAGE platform helped New York City's Consolidated Edison cut the cost of a system upgrade from $1.2 billion for new infrastructure to $200 million with an energy efficiency-based NWA, Schueller said.

Customer engagement may be further along than other use cases because utilities are moving faster on systems that provide better customer support and improve the customer experience, Moyer said.

Data analytics can identify each customer's propensity to adopt different technologies or behaviors, software provider EnergySavvy's senior VP Michael Rigney told Utility Dive. National Grid is using EnergySavvy's Utility Customer Experience platform to more effectively reach low-to-moderate income customers.

The limiting factor in data analytics is not server storage or processing power, but "the ability to manage the data, interpret it, and use it to make predictions," Kanellos said. The biggest uses of OSI data have so far been limited to asset analytics, "but the newest application is creating new revenue streams by sharing data between utilities."

Nuclear plant owners looking to cut the cost of operational failures asked EPRI to use pooled historical data from 25 units to predict failure rates, EPRI's Phillips said. "There was much more strength in failure predictions using such a large pool of data."

EPRI subsequently coordinated data from 45 utilities on 75,000 transformers' failure rates, he said. "That amount of granular analysis has proven much more useful in making investment planning decisions," Phillips told Utility Dive.
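
Phillips' point about pooled data is, at least in part, a sample-size effect. The following hypothetical sketch computes an approximate 95% Wilson score interval for an annual failure rate. It treats each transformer as an independent trial with the same failure probability, which is a simplifying assumption. The counts are invented and are not EPRI's data: one utility observing 15 failures among 1,500 transformers, and a pool observing 750 failures among 75,000. Both imply a 1% failure rate, but the pooled interval comes out roughly seven times narrower.

```python
import math
from typing import Tuple


def wilson_interval(failures: int, units: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score confidence interval for a failure proportion.

    z = 1.96 gives an approximate 95% interval. Assumes each unit is an
    independent trial with the same underlying failure probability.
    """
    if units <= 0 or not 0 <= failures <= units:
        raise ValueError("need units > 0 and 0 <= failures <= units")
    p = failures / units
    z2 = z * z
    denom = 1 + z2 / units
    center = (p + z2 / (2 * units)) / denom
    half_width = z * math.sqrt(p * (1 - p) / units + z2 / (4 * units * units)) / denom
    return center - half_width, center + half_width


if __name__ == "__main__":
    # Invented counts for illustration only; both imply a 1% annual failure rate.
    for label, failures, units in [("single utility", 15, 1_500), ("pooled", 750, 75_000)]:
        low, high = wilson_interval(failures, units)
        print(f"{label}: {low:.4f} to {high:.4f} (width {high - low:.4f})")
```

The narrower interval reflects sample size only. Pooling fleets that differ in design, age and loading can bias a pooled estimate, so a careful analysis would also stratify or model those differences.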

The vision

Data analytics is already improving utilities' asset performance and is beginning to improve customer engagement. But the automated and integrated system that will enable peak performance, energy networks, and connected communities is still to come.

The next step is "enabling advanced analytics" and incorporating it into AI processing "at hyperscale," GE's Schnugg said.

"The big data journey will be fundamental in the rational and orderly transition to the industry vision of very high renewable and distributed energy penetrations," Siemens' King added. Big data will be the "glue" that integrates them into a "stable, reliable, safe, and low-cost" system.

To get there, utilities need to add "21st century digital professionals" to the people who now operate the grid, Zpryme's Moyer said. Duke may be setting the bar on that front, several people interviewed by Utility Dive said.

"Data management and data science are competencies essential to our future business model," Duke's Butler said. "We have a team of data scientists that innovate on our data and a MAD (Machine learning, Artificial intelligence, Deep learning) Lab that brings technology to bear on big data problems."

Exelon's analytics team has grown from two people in 2015, when Hurst took over, to ten this year. "My team's role is to use data to make Exelon's vision of a connected community a reality," he said. "My personal concern is making Exelon a data-driven organization, with people who know what data can do and how to find and use it to make decisions."

It is also important to work with suppliers and vendors that understand the importance of digital transformation, Butler said.

"Finding the right external partners will be necessary," Moyer agreed. And utilities also need to work with regulators. "This technological revolution will require outside the box thinking by utilities and regulators, but utilities need to show that need to regulators."

An integrated, distributed network built on data analytics and communications technologies will eventually allow peer-to-peer energy transactions between businesses and residences on the network, Moyer said. But that transactive energy system will require more situational awareness and more decentralized control — and guardrails in utility control rooms will be necessary for the foreseeable future to protect against machine error and unintended consequences.

"Today, the question is how to drive efficiency and improve customer satisfaction, but the journey is about the utility's role in a connected community," Hurst said. "Enabling peer-to-peer transactions between customers generating and consuming energy will be a part of it. But the big question is how to make the connected community vision a reality. Data analytics is the enabler."